Scene Change Captioning

-

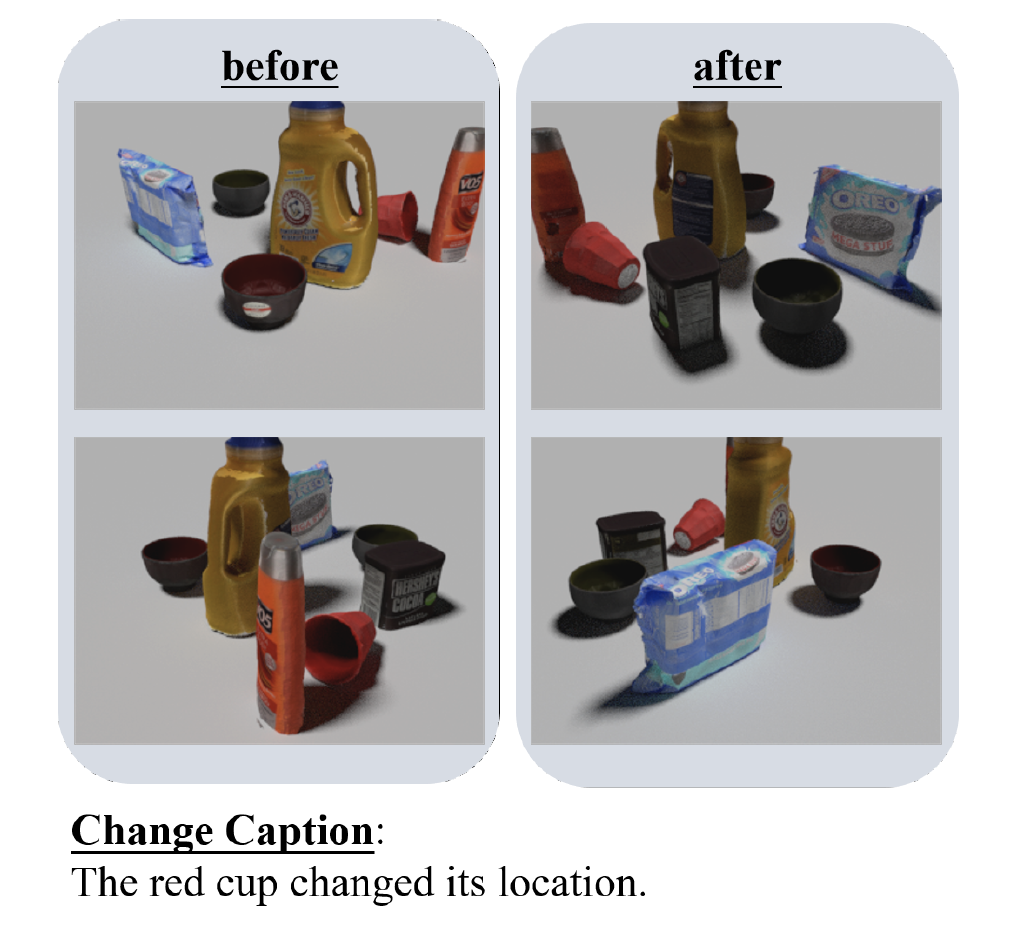

Our framework can describe the changed area of a scene in a real environment.

In this letter, we propose a framework that recognizes changes occurring in a scene observed from multiple viewpoints and describes them in natural language text. The ability to recognize and describe changes in a 3D scene plays an essential role in a variety of human-robot interaction applications. However, most current 3D vision studies have focused on understanding static 3D scenes. Existing scene change captioning approaches recognize changes and generate change captions from single-view images. Those methods have limited ability to deal with camera movement and object occlusion, both of which are common in real-world settings. To resolve these problems, we propose a framework that observes every scene from multiple viewpoints and describes the scene change based on an understanding of the underlying 3D structure of the scenes. We build three synthetic datasets consisting of primitive 3D objects and scanned real object models for evaluation. The results indicate that our method outperforms the previous state-of-the-art 2D-based method by a large margin in terms of sentence generation and change understanding correctness. In addition, our method is more robust to camera movement than the previous method and performs better for scenes with occlusions. Moreover, our method shows encouraging results in a realistic scene setting, which indicates the possibility of adapting our framework to more complicated and extensive scene settings.

-

Submitted to

RA-Letters with IROS presentation

-

Members

Yue Qiu (Tsukuba/AIST), Yutaka Satoh (AIST), Ryota Suzuki (AIST), Kenji Iwata (AIST), Hirokatsu Kataoka (AIST)